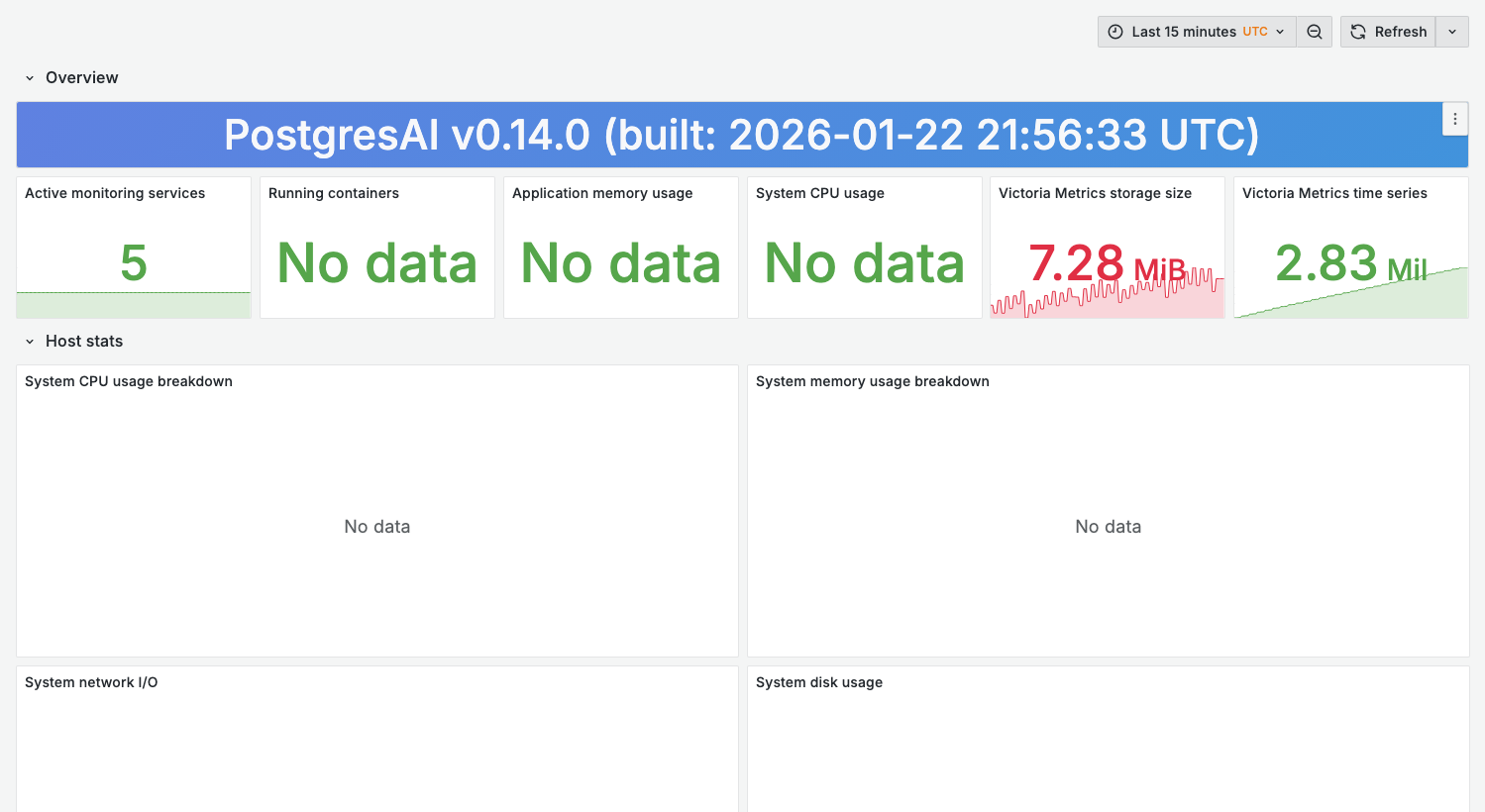

Self-monitoring dashboard

Monitor the health of the monitoring stack itself.

The screenshot shows a containerized demo environment. Some host-level panels (CPU, memory, disk, network) require node_exporter or cAdvisor with Docker socket access, so they can appear empty in the demo. In production environments with proper host metrics collection, all panels display data.

Purpose

Ensure the monitoring infrastructure is functioning correctly:

- Metrics collection is working

- Storage has capacity

- No data gaps

- Alert pipeline is healthy

When to use

- Regular monitoring stack health checks

- After monitoring stack updates

- When dashboards show "No data"

- Capacity planning for monitoring infrastructure

Key panels

Scrape success rate

What it shows:

- Percentage of successful metric scrapes

- Per-target breakdown

Healthy state:

- 100% success rate

- Consistent scrape intervals

Warning signs:

- Scrape failures — check target availability

- Timeouts — target may be overloaded
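One way to reproduce this panel's numbers from the command line is to average the standard `up` metric per job. This is a minimal sketch, assuming VictoriaMetrics listens on `localhost:8428` as in the health check commands on this page. The `VM_URL` variable and the `scrape_success_by_job` function name are illustrative, and the dashboard's actual panel query may differ.

```shell
# Assumed endpoint; override VM_URL if VictoriaMetrics listens elsewhere.
VM_URL="${VM_URL:-http://localhost:8428}"

# Print the per-job average of `up` over the last hour as a percentage.
# `up` is 1 for a successful scrape and 0 for a failed one.
scrape_success_by_job() {
  curl -G -fsS --max-time 10 "$VM_URL/api/v1/query" \
    --data-urlencode 'query=avg by (job) (avg_over_time(up[1h])) * 100'
}
```

Running `scrape_success_by_job | jq '.data.result[] | {job: .metric.job, pct: .value[1]}'` lists one percentage per job; any value below 100 points at failed scrapes within the last hour.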

Metrics ingestion rate

What it shows:

- Samples ingested per second

- Trend over time

Use for:

- Capacity planning

- Detecting metric explosion
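The ingestion rate can also be approximated without Grafana by reading a counter from the VictoriaMetrics `/metrics` endpoint twice and dividing the difference by the elapsed time. The sketch below assumes the counter is named `vm_rows_inserted_total`, as exposed by recent single-node VictoriaMetrics versions. The `VM_URL` variable and both function names are illustrative.

```shell
# Assumed endpoint; override VM_URL if VictoriaMetrics listens elsewhere.
VM_URL="${VM_URL:-http://localhost:8428}"

# Sum every sample of one metric name from Prometheus text-format input on stdin.
sum_metric() {
  awk -v name="$1" '
    $0 !~ /^#/ {
      metric = $1
      sub(/[{].*/, "", metric)   # strip the label set, keep the bare name
      if (metric == name) total += $NF
    }
    END { printf "%.0f\n", total }
  '
}

# Estimate samples ingested per second by reading the counter twice.
# $1 is the pause between the two reads in seconds (default 10).
ingestion_rate() {
  interval="${1:-10}"
  before=$(curl -fsS --max-time 10 "$VM_URL/metrics") || return 1
  sleep "$interval"
  after=$(curl -fsS --max-time 10 "$VM_URL/metrics") || return 1
  a=$(printf '%s\n' "$before" | sum_metric vm_rows_inserted_total)
  b=$(printf '%s\n' "$after" | sum_metric vm_rows_inserted_total)
  awk -v a="$a" -v b="$b" -v t="$interval" 'BEGIN { printf "%.1f\n", (b - a) / t }'
}
```

Calling `ingestion_rate 30` prints the average samples per second over a 30-second window. A value that jumps well above the usual baseline is a hint to look for metric explosion.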

Storage usage

What it shows:

- VictoriaMetrics disk usage

- Projected capacity based on retention

Warning threshold:

- Alert when > 80% capacity
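A quick way to check the 80% threshold outside Grafana is to read filesystem usage with `df`. This sketch assumes the VictoriaMetrics data directory sits on its own volume. The `/victoria-metrics-data` path in the usage note and both function names are illustrative, so substitute the `-storageDataPath` of your deployment.

```shell
# Print the used-space percentage (0-100) of the filesystem holding a path.
disk_used_pct() {
  df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Succeed (exit 0) when usage is above the threshold; $2 defaults to 80.
storage_over_threshold() {
  [ "$(disk_used_pct "$1")" -gt "${2:-80}" ]
}
```

For example, `storage_over_threshold /victoria-metrics-data && echo "storage above 80%"` prints a warning only when the volume is more than 80% full. Note that this measures the whole filesystem, not only the VictoriaMetrics data.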

Active time series

What it shows:

- Number of unique metric series

- Growth trend

Monitoring series growth:

- Sudden spikes may indicate cardinality explosion

- Gradual growth expected as you add targets

Query performance

What it shows:

- Grafana query latency

- Slow queries

Variables

| Variable | Purpose |
|---|---|
| cluster_name | Filter by monitored cluster |

Health check commands

Check VictoriaMetrics status

curl http://localhost:8428/api/v1/status/tsdb

Check pgwatch status

docker compose logs pgwatch --tail=50

Check Prometheus/VM targets

curl http://localhost:8428/api/v1/targets

Verify metrics collection

curl 'http://localhost:8428/api/v1/query?query=up'

Common issues

Dashboards show "No data"

- Check that scrape targets are up:

  curl http://localhost:8428/api/v1/targets | jq '.data.activeTargets[] | {job: .labels.job, health: .health}'

- Verify that the metric exists:

  curl 'http://localhost:8428/api/v1/label/__name__/values' | jq '.data[]' | grep pg_

- Check that the dashboard time range covers a period when data was collected

High storage growth

- Check for cardinality explosion:

  curl 'http://localhost:8428/api/v1/status/tsdb' | jq '.data.totalSeries'

- Review high-cardinality metrics (the endpoint returns a list of name/value entries):

  curl 'http://localhost:8428/api/v1/status/tsdb' | jq '.data.seriesCountByMetricName | sort_by(-.value) | .[0:10]'

- Adjust retention if needed:

  # docker-compose.yml
  victoriametrics:
    command:
      - "-retentionPeriod=30d" # Reduce from 90d

Scrape timeouts

- Increase scrape timeout:

  # prometheus.yml
  scrape_configs:
    - job_name: 'pgwatch'
      scrape_timeout: 30s # must not exceed scrape_interval

- Check target database performance

- Review pgwatch resource allocation

Capacity planning

Estimating storage needs

| Factor | Impact |
|---|---|
| Number of databases | Linear increase |
| Scrape interval | Shorter = more data |
| Retention period | Longer = more storage |
| Query cardinality | High = more series |

Formula:

Daily storage ≈ (series_count × samples_per_day × bytes_per_sample) / compression_ratio

Typical values:

- Bytes per sample: ~2-4 (compressed)

- Compression ratio: 10-15x

- Samples per day at 60s interval: 1,440
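As a worked example of the formula, the sketch below plugs in mid-range values from the list above. The 100,000-series figure and the `estimate_daily_bytes` function name are illustrative assumptions, not measurements from a real deployment.

```shell
# Daily storage in bytes:
#   (series_count * samples_per_day * bytes_per_sample) / compression_ratio
estimate_daily_bytes() {
  awk -v s="$1" -v n="$2" -v b="$3" -v r="$4" \
    'BEGIN { printf "%.0f\n", (s * n * b) / r }'
}

# 100,000 series (assumed), 60s interval, 3 bytes per sample, 12x compression
daily=$(estimate_daily_bytes 100000 1440 3 12)
echo "$daily"            # 36000000

# Multiply by the retention period in days for total disk, here 90 days
echo $((daily * 90))     # 3240000000
```

With these inputs the estimate is about 36 MB per day, or roughly 3.2 GB over a 90-day retention period. Replace the series count with the value reported by the Active time series panel to get a figure for your own setup.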

Scaling recommendations

| Databases | Recommended resources |
|---|---|
| 1-5 | 2 CPU, 2 GiB RAM, 20 GiB disk |
| 6-20 | 4 CPU, 4 GiB RAM, 100 GiB disk |
| 21-50 | 8 CPU, 8 GiB RAM, 500 GiB disk |

Related dashboards

- Target database health — 01. Node Overview